Claude 3.7 Sonnet vs Gemini 2.5 Pro: Which AI Is Better for Coding?

If you’re a programmer, you’re likely already using AI and large language models in your workflow. For some time now, Claude Sonnet has been the undisputed king of models used for programming. It has earned the top spot in multiple code-focused benchmarks, such as the Aider LLM leaderboard and LiveBench.

But that dominance might be changing. Google’s Gemini 2.5 Pro has quickly taken the top spot from Sonnet 3.7 in many benchmarks. It also sports a 1 million token context window, about 5 times larger than the 200K tokens Sonnet 3.7 offers today.

Benchmark scores are one thing, but how do these models actually perform in real-world programming scenarios? I decided to put them to the test.

Prefer watching a video? See my live comparison between Claude 3.7 Sonnet and Gemini 2.5 Pro

The Testing Environment

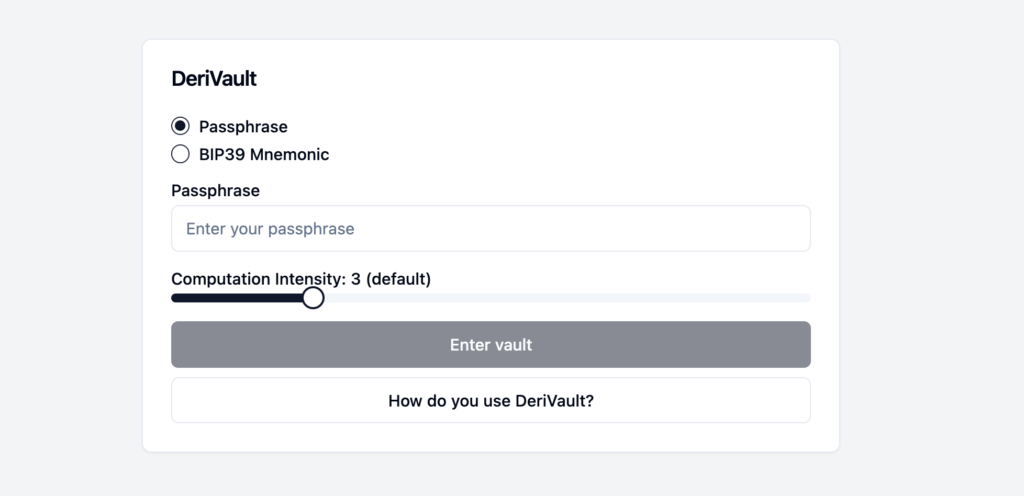

For this test, I used my password manager project called DeriVault – an offline-first deterministic password manager built with Svelte and SvelteKit. I did this because I wanted to see how well both models could work with a large, existing codebase.

I first used my ai-digest tool to convert the entire codebase into a single markdown file by running:

npx ai-digest

This generates a codebase.md file that I then uploaded to both services: Claude Projects for Claude and AI Studio for Gemini.

Initial Impressions

I first asked both models to suggest improvements for the password manager. Claude immediately dove into suggesting features with implementation code, while Gemini took a more analytical approach, identifying strengths and weaknesses before categorizing its suggestions by importance.

Both models provided solid recommendations, but I found Claude’s suggestions slightly more aligned with the project’s philosophy, while Gemini’s suggestions sometimes felt a bit disconnected from the core concept.

The Real Test: Implementing Dark Mode

The main challenge was asking both models to implement dark mode for the entire application—a task I knew would require changes across dozens of files.

To my surprise, both models successfully implemented working dark mode functionality.

Claude’s Implementation

Claude created a React-inspired “theme provider” pattern, which felt a bit over-engineered for a Svelte application. It also added theme toggles in both the main navigation and settings page, with extra descriptive text that didn’t match the existing UI patterns.

The implementation worked well, but I felt like it wasn’t as simple as it could be.

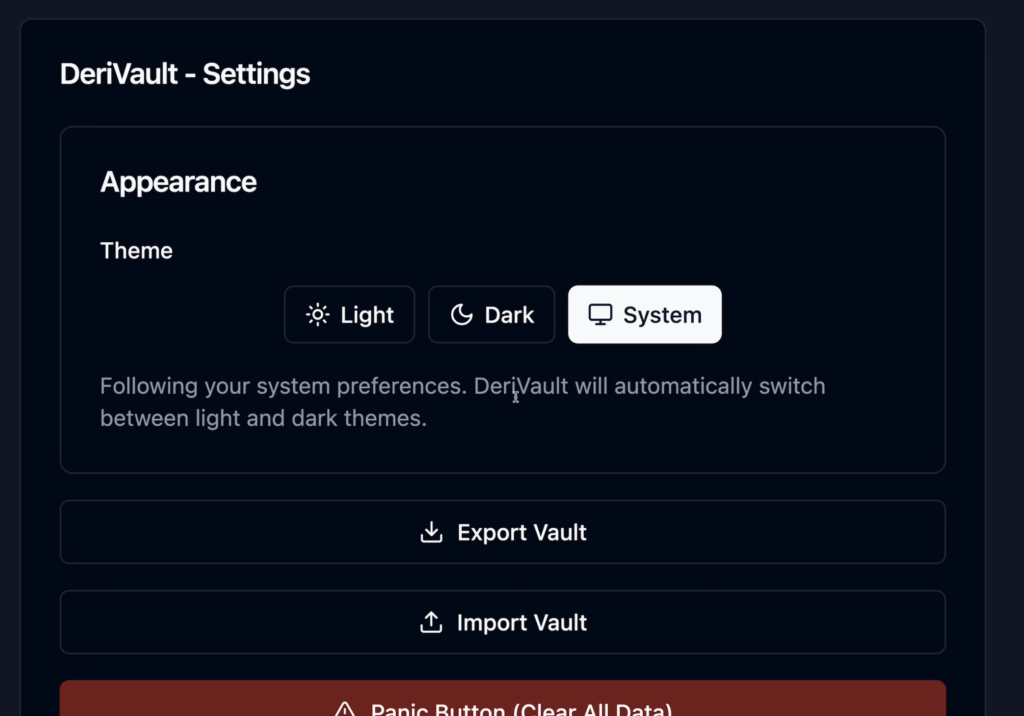

Gemini’s Implementation

Gemini’s solution was more streamlined. It placed the theme toggle only in settings. The code was cleaner overall and more aligned with Svelte patterns.

The one minor drawback was that Gemini placed all of the dark mode code in the root layout, which isn’t ideal architecturally.
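One way to keep theme logic out of the root layout is to move it into a small dedicated module. The sketch below is not Gemini’s code or anything from DeriVault. It is a hand-rolled store that follows Svelte’s store contract (subscribe calls the callback immediately and returns an unsubscribe function), and the name createThemeStore is hypothetical. In a real Svelte app, writable from svelte/store would do the same job.

```typescript
type Theme = "light" | "dark";
type Subscriber = (value: Theme) => void;

// A tiny store following Svelte's store contract, written without
// framework imports so it can run anywhere. Names are illustrative only.
function createThemeStore(initial: Theme) {
  let current: Theme = initial;
  const subscribers = new Set<Subscriber>();

  // Register a listener, call it right away with the current value,
  // and return a function that removes it again.
  function subscribe(run: Subscriber): () => void {
    subscribers.add(run);
    run(current);
    return () => {
      subscribers.delete(run);
    };
  }

  // Update the theme and notify listeners only when the value changes.
  function set(next: Theme): void {
    if (next === current) return;
    current = next;
    subscribers.forEach((run) => run(current));
  }

  // Flip between light and dark.
  function toggle(): void {
    set(current === "dark" ? "light" : "dark");
  }

  return { subscribe, set, toggle };
}
```

With something like this in its own file, the settings page could call toggle() and the layout would only need to subscribe, instead of owning all of the logic itself.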

What Both Models Got Right

Impressively, both models handled several SvelteKit-specific details correctly. Each recognized that a naive implementation would cause a “flash of unstyled content” (the page briefly rendering in the wrong theme before the saved preference is applied), and each worked around it by setting the theme early, in SvelteKit’s app.html template, which loads before the rest of the app.
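To make the workaround concrete: SvelteKit renders every page inside the template in src/app.html, so a small inline script in its head can choose the theme before anything is painted. The sketch below is not either model’s actual output. It only shows the decision logic in TypeScript, and the storage key "theme", the "dark" class name, and the function names are assumptions for illustration.

```typescript
type Theme = "light" | "dark";

// Minimal shape of the element whose class we toggle, so the logic can be
// exercised without a browser. In the page this would be document.documentElement.
interface ClassTarget {
  classList: { toggle(name: string, force?: boolean): boolean };
}

// An explicit saved choice wins; otherwise fall back to the OS preference.
// Anything unrecognized in storage is treated as "no saved choice".
function resolveTheme(stored: string | null, prefersDark: boolean): Theme {
  if (stored === "light" || stored === "dark") return stored;
  return prefersDark ? "dark" : "light";
}

// Apply the theme by adding or removing a (hypothetical) "dark" class.
function applyTheme(root: ClassTarget, theme: Theme): void {
  root.classList.toggle("dark", theme === "dark");
}
```

In the browser, the inline script would call something along the lines of applyTheme(document.documentElement, resolveTheme(localStorage.getItem("theme"), window.matchMedia("(prefers-color-scheme: dark)").matches)). Because this runs before the body is rendered, the first paint already uses the right theme.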

The Verdict

After thinking about it, I preferred Gemini’s output for this particular task. Its implementation was cleaner, more concise, and better aligned with Svelte patterns, despite a few architectural issues.

This is notable because I was testing what I thought would be a worst-case scenario: asking an LLM to refactor an entire app to add dark mode. I was impressed that both models succeeded.

Conclusion

Given Gemini 2.5 Pro’s larger context window (5x Claude’s) and its impressive performance on this complex coding task, it’s becoming a very competitive option for developers working with large codebases. Keep in mind that there are some privacy implications with Google’s solution, as Google trains on AI Studio inputs and outputs. You should be able to work around this by using Gemini Pro and/or Vertex AI, but I haven’t explored these options.

I’ll be spending more time with Gemini in the future to see if this performance holds up across different types of projects and programming languages.

Have you tried both models for coding assistance? Which one worked better for your particular use case? Let me know in the comments below!
